To scale a database beyond a certain limit, its data has to be sharded (distributed). MongoDB, as a document store, has built-in sharding.

1. Sharding:

Sharding is a method for distributing data across multiple machines. MongoDB uses sharding to support deployments with very large data sets and high throughput on read and write operations.

Database systems with large data sets or high-throughput applications can challenge the capacity of a single server.

Scaling can be achieved by one of the following methods.

- Vertical Scaling

- Horizontal Scaling

Vertical Scaling:

- Vertical scaling involves increasing the capacity of a single server, for example by adding CPU, memory, or storage capacity, or by switching to higher-throughput storage devices.

- It is not always possible to scale beyond a certain point, because a single machine has hardware limits.

- It is expensive and time-consuming.

Horizontal Scaling:

- Horizontal Scaling involves dividing the system dataset and load over multiple servers, adding additional servers to increase capacity as required.

- We can use commodity servers and share the production traffic across multiple machines.

2. MongoDB Sharded Cluster:

- MongoDB supports horizontal scaling through MongoDB Sharded Cluster. MongoDB shards data at the collection level, distributing the collection data across the shards in the cluster.

- Sometimes the data within MongoDB is so large that queries against it cause high CPU utilization on the server.

- To overcome this, MongoDB uses sharding, which splits a data set across multiple MongoDB instances.

- A large collection is split into multiple parts, called shards. Logically, all the shards work as one collection.
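To make the idea of splitting one collection concrete, here is a minimal sketch in Python of range-based placement. The split points, the chunk-to-shard assignment, and the `order_id` field are all hypothetical values picked for illustration; this is a toy model of the concept, not how MongoDB stores chunk metadata internally.

```python
from bisect import bisect_right

# Hypothetical split points on the shard key: keys below 100 form the first
# chunk, 100-199 the second, and 200 and above the third.
SPLIT_POINTS = [100, 200]
# Hypothetical assignment of each chunk to a shard.
CHUNK_OWNERS = ["shard11", "shard12", "shard11"]


def shard_for(key):
    """Return the shard that owns the chunk containing this shard key value."""
    return CHUNK_OWNERS[bisect_right(SPLIT_POINTS, key)]


def distribute(documents, key_field):
    """Group documents by owning shard, one list of documents per shard."""
    placement = {}
    for doc in documents:
        placement.setdefault(shard_for(doc[key_field]), []).append(doc)
    return placement


orders = [{"order_id": i} for i in (5, 150, 250, 99, 100)]
placement = distribute(orders, "order_id")
for shard, docs in sorted(placement.items()):
    print(shard, [d["order_id"] for d in docs])
```

Each document lands on exactly one shard, yet together the shards still hold the whole collection.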

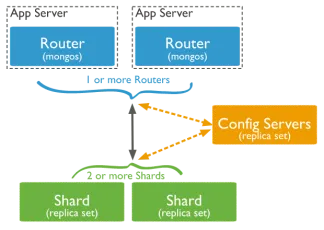

The following graphic describes the interaction of components within a sharded cluster:

MongoDB Sharded Cluster Architecture:

Advantages:

- Data is split across multiple machines using a shard key, which also makes it possible to distribute and access the data geographically.

- Applications have less overhead when accessing data, because each shard works only on its own subset of the data.

- There is no single point of failure when each shard is deployed as a replica set, which also gives higher durability.

- It’s possible to add or remove servers as per our requirement and balance the data.

- Maintaining the data is easier than handling it all on a single server.

2.1. Components:

A MongoDB Sharded Cluster consists of the following components:

- Shard

- Mongos

- Config servers

Shard:

- Each shard contains a subset of the sharded data. Each shard can be deployed as a replica set.

Mongos:

- The mongos acts as a query router, providing an interface between client applications and the sharded cluster.

- Applications never connect or communicate directly with the shards.

- The mongos tracks what data is on which shard by caching the metadata from the config servers.

- The mongos uses the metadata to route operations from applications and clients to the mongod instances.

- The mongos receives the responses from the shards it queried, merges the data, and returns the result to the client.
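The routing behaviour described above can be sketched in a few lines of Python. This is a toy model under stated assumptions: the `CHUNKS` table stands in for the metadata cached from the config servers, the `SHARDS` dictionary stands in for the mongod instances, and the `user_id` shard key and sample documents are hypothetical.

```python
# Hypothetical cached metadata: (lower bound, upper bound, owning shard).
CHUNKS = [
    (float("-inf"), 100, "shard11"),
    (100, 200, "shard12"),
    (200, float("inf"), "shard11"),
]

# In-memory stand-ins for the shards; each holds its own subset of documents.
SHARDS = {
    "shard11": [{"user_id": 7, "name": "a"}, {"user_id": 250, "name": "c"}],
    "shard12": [{"user_id": 120, "name": "b"}],
}


def target_shards(query):
    """Pick one shard if the query pins the shard key, otherwise all shards."""
    if "user_id" in query:
        key = query["user_id"]
        return [shard for low, high, shard in CHUNKS if low <= key < high]
    return sorted({shard for _, _, shard in CHUNKS})


def find(query):
    """Send the query to the target shards and merge their results."""
    results = []
    for shard in target_shards(query):
        for doc in SHARDS[shard]:
            if all(doc.get(field) == value for field, value in query.items()):
                results.append(doc)
    return results


print(target_shards({"user_id": 120}))  # targeted: one shard
print(target_shards({"name": "c"}))     # no shard key: every shard is asked
print(find({"name": "c"}))
```

A query that includes the shard key can be sent to a single shard, while a query without it has to be broadcast and merged, which is one reason the choice of shard key matters.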

Config servers:

- Config servers store metadata and configuration settings for the cluster. As of MongoDB 3.4, config servers must be deployed as a replica set (CSRS).

- If your cluster has a single config server, then the config server is a single point of failure.

- If the config server is inaccessible, the cluster is not accessible.

- If you cannot recover the data on a config server, the cluster will be inoperable.

- Always use three config servers for production deployments.
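The recommendation of three members follows from the fact that a replica set needs a majority of its voting members to elect a primary. Here is a small arithmetic sketch in Python; the function names are my own and are not part of any MongoDB API.

```python
def majority(members):
    """Votes needed to elect a primary with this many voting members."""
    return members // 2 + 1


def fault_tolerance(members):
    """How many members can be lost while a majority is still reachable."""
    return members - majority(members)


for n in (1, 2, 3):
    print(f"{n} member(s): majority={majority(n)}, tolerates {fault_tolerance(n)} failure(s)")
```

With one or two members, losing a single node already loses the majority. Three is the smallest size that survives one failure.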

2.2. Shard Key:

In a MongoDB sharded cluster, data is distributed across the servers (shards) using the shard key, which is a field (or set of fields) in the collection. Choosing the right shard key is therefore very important for keeping the data balanced within the sharded cluster.

- Once a collection is sharded on a shard key, the key cannot easily be changed. Doing so requires a lot of operational overhead.

- The field chosen as the shard key must be indexed.
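To see why the shard key affects balance, here is a minimal Python sketch comparing ranged and hashed placement for a monotonically increasing key. It is a simplification under stated assumptions: the split point is hypothetical, and the MD5-modulo function is only a stand-in, since MongoDB's hashed sharding assigns ranges of hash values to chunks rather than taking a modulo.

```python
import hashlib
from collections import Counter

SHARDS = ["shard11", "shard12"]


def ranged_shard(key, split_point=1000):
    """Ranged placement: keys below the (hypothetical) split point go to the first shard."""
    return SHARDS[0] if key < split_point else SHARDS[1]


def hashed_shard(key):
    """Hashed placement (stand-in): use a hash of the key to choose the shard."""
    digest = hashlib.md5(str(key).encode()).digest()
    return SHARDS[int.from_bytes(digest[:8], "big") % len(SHARDS)]


# Monotonically increasing keys, e.g. new order ids arriving over time.
new_keys = range(1000, 2000)
ranged = Counter(ranged_shard(k) for k in new_keys)
hashed = Counter(hashed_shard(k) for k in new_keys)
print("ranged:", dict(ranged))
print("hashed:", dict(hashed))
```

With the ranged key every new document lands on the same shard, while the hashed key spreads the inserts across both.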

The mongo shell has several helper commands for sharding. They are accessed through the sh object.

mongo> sh.*

To shard a collection, you must specify the target collection and the shard key.

mongo> sh.shardCollection( namespace, key )

- The namespace parameter is a string of the form database.collection, giving the full namespace of the target collection.

- The key parameter consists of a document containing a field and the index traversal direction for that field.
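As a rough illustration of the shape of these two arguments, here is a Python sketch that validates a namespace and builds the key document. The helper names and the `shop.orders` / `order_id` example are hypothetical; the code only constructs values and does not talk to a cluster.

```python
def split_namespace(namespace):
    """Split "database.collection" at the first dot; collection names may contain dots."""
    database, sep, collection = namespace.partition(".")
    if not sep or not database or not collection:
        raise ValueError(f"not a valid namespace: {namespace!r}")
    return database, collection


def shard_collection_args(namespace, field, direction=1):
    """Build the (namespace, key) pair in the shape sh.shardCollection takes."""
    split_namespace(namespace)  # validation only
    if direction not in (1, "hashed"):
        raise ValueError("direction must be 1 (ranged) or 'hashed'")
    return namespace, {field: direction}


print(split_namespace("shop.orders"))
print(shard_collection_args("shop.orders", "order_id"))
print(shard_collection_args("shop.orders", "order_id", "hashed"))
```

In the mongo shell the equivalent call would pass the same two values, for example a namespace string and a key document such as `{ order_id: 1 }`.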

3. Implementation:

In this blog, I am going to share the details of how to implement sharding in MongoDB, with data split across shards.

For this demo, I'm going to spin up 2 data shards, each a 2-node replica set, a config server as a 2-node replica set, and 1 mongos instance.

Shard 1 -> node 1 (192.168.33.31), node 2 (192.168.33.32)

Shard 2 -> node 3 (192.168.33.33), node 4 (192.168.33.34)

Config Server Replica Set -> node 5 (192.168.33.35), node 6 (192.168.33.36)

mongos -> node 7 (192.168.33.37)

OS: CentOS 7

MongoDB Version: 3.4
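It can help to write this topology down as data and derive the replica set seed strings used in the later steps from it. Below is a small Python sketch. The replica set names are the ones chosen in the steps that follow, the port is the default 27017 used throughout this demo, and the `seed_string` helper is my own illustration rather than a MongoDB tool.

```python
PORT = 27017  # default mongod port, as used throughout this walkthrough

# Replica set name -> member IP addresses, taken from the node list above.
TOPOLOGY = {
    "shard11": ["192.168.33.31", "192.168.33.32"],
    "shard12": ["192.168.33.33", "192.168.33.34"],
    "config11": ["192.168.33.35", "192.168.33.36"],
}


def seed_string(replica_set, hosts=None, port=PORT):
    """Format "<replSetName>/<host:port>,<host:port>" for a replica set."""
    hosts = TOPOLOGY[replica_set] if hosts is None else hosts
    return replica_set + "/" + ",".join(f"{host}:{port}" for host in hosts)


print(seed_string("config11"))                    # value for sharding.configDB on mongos
print(seed_string("shard11", ["192.168.33.31"]))  # single-seed form for sh.addShard
```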

Step 1: Installing MongoDB: [ Perform It On All The Nodes ]

- Set up the MongoDB repository. Create the repo file /etc/yum.repos.d/mongodb-org-3.4.repo with the below contents:

[mongodb-org-3.4]

name=MongoDB Repository

baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/3.4/x86_64/

gpgcheck=1

enabled=1

gpgkey=https://www.mongodb.org/static/pgp/server-3.4.asc

- Install MongoDB

# sudo yum install -y mongodb-org

Step 2: Configure Shards:

- Setup Shard1: [ node 1, node 2 ]

- As I have outlined, Shard1 is going to be a 2-node replica set. We will name it shard11.

- Edit the mongod config /etc/mongod.conf on node 1 and node 2, and add the replica set name and cluster role.

replication:

  replSetName: "shard11"

sharding:

  clusterRole: "shardsvr"

- Start the mongodb service

# service mongod start

- Now we are going to configure node 1 and node 2 as a replica set. Connect to either node 1 or node 2 and run the following commands.

# mongo --host 192.168.33.31

> rs.initiate()

{

"info2" : "no configuration specified. Using a default configuration for the set",

"me" : "192.168.33.31:27017",

"ok" : 1

}

shard11:OTHER>

shard11:PRIMARY> rs.add("192.168.33.32")

{ "ok" : 1 }

- Shard1 is now configured as a 2-node replica set. The replica set status can be monitored with the mongo shell command rs.status().

- Setup Shard2: [ node 3, node 4 ]

- Perform the same steps as for Shard1. The only change is that we need to choose a different name for the shard's replica set. We can name it shard12.

Mongod Config: /etc/mongod.conf

replication:

  replSetName: "shard12"

sharding:

  clusterRole: "shardsvr"

Step 3: Configure Config Server: [ node 5, node 6 ]

We need to perform the same steps as for the shard replica sets, except that we will choose a different name, "config11", for the replica set and "configsvr" as the cluster role.

Mongod Config: /etc/mongod.conf

replication:

  replSetName: "config11"

sharding:

  clusterRole: "configsvr"
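Since the three replica sets differ only in their name and cluster role, the config fragment can be generated instead of edited by hand on every node. Here is a minimal Python sketch; the `mongod_conf_fragment` helper is hypothetical, and it only produces the two sections shown in this post, not a complete mongod.conf.

```python
def mongod_conf_fragment(repl_set_name, cluster_role):
    """Return the replication and sharding sections for /etc/mongod.conf."""
    if cluster_role not in ("shardsvr", "configsvr"):
        raise ValueError("cluster_role must be 'shardsvr' or 'configsvr'")
    return (
        "replication:\n"
        f'  replSetName: "{repl_set_name}"\n'
        "sharding:\n"
        f'  clusterRole: "{cluster_role}"\n'
    )


# Replica set names and roles used in this walkthrough.
for name, role in [("shard11", "shardsvr"), ("shard12", "shardsvr"), ("config11", "configsvr")]:
    print(f"# {name}")
    print(mongod_conf_fragment(name, role))
```

Note that the nested keys are indented under their section, which YAML requires.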

Step 4: Configure mongos: [ node 7 ]

- As I have outlined, mongos is going to be a standalone node.

- Edit the config /etc/mongod.conf on node 7 and add the configDB details.

sharding:

  configDB: config11/192.168.33.35:27017,192.168.33.36:27017

- Start the mongos service

# mongos --config /etc/mongod.conf

- Now log in to mongos and perform the following operations to initialize the shards.

# mongo --host 192.168.33.37

- For MongoDB version 3.4, you must run the below command

mongos> db.adminCommand( { setFeatureCompatibilityVersion: "3.4" } )

{ "ok" : 1 }

- Now add the primary nodes of shard1 and shard2

mongos> sh.addShard( "shard12/192.168.33.33:27017" )

{ "shardAdded" : "shard12", "ok" : 1 }

mongos> sh.addShard( "shard11/192.168.33.31:27017" )

{ "shardAdded" : "shard11", "ok" : 1 }

NOTE:

- If we fail to set setFeatureCompatibilityVersion: "3.4", we will not be able to add the shard

mongos> sh.addShard( "shard11/192.168.33.31:27017" )

{

"code" : 96,

"ok" : 0,

"errmsg" : "failed to run command { setFeatureCompatibilityVersion: \"3.4\" } when attempting to add shard shard11/192.168.33.31:27017,192.168.33.32:27017 :: caused by :: PrimarySteppedDown: Primary stepped down while waiting for replication. "

}
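When these steps are scripted, it is worth checking the "ok" field of every reply rather than assuming success. Here is a minimal Python sketch of such a check, working on a reply shaped like the documents shown above; `CommandFailed` and `check_reply` are hypothetical names of my own, not part of any MongoDB driver.

```python
class CommandFailed(Exception):
    """Raised when a command reply reports ok: 0."""


def check_reply(reply):
    """Return the reply if it succeeded, otherwise raise with its code and message."""
    if reply.get("ok") == 1:
        return reply
    raise CommandFailed(f"code {reply.get('code')}: {reply.get('errmsg')}")


# Reply documents shaped like the ones in this post (error message shortened).
added = {"shardAdded": "shard11", "ok": 1}
failed = {"code": 96, "ok": 0, "errmsg": "failed to run command"}

print(check_reply(added)["shardAdded"])
try:
    check_reply(failed)
except CommandFailed as exc:
    print("addShard failed:", exc)
```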

I hope this covers the basics of MongoDB sharding.
